25/06/2025

Creating glossaries for MT and AI: why less is sometimes more

Anyone who uses machine translation (MT) or AI systems for translation is familiar with this problem: AI sometimes translates “Bolzen” as “bolt”, sometimes as “stud” – depending on the day of the week. This results in a lot of post-editing being required, which quickly eats up the cost savings of AI. Glossaries have proven to be a tried-and-tested tool for minimising these errors. In our projects, we have reduced terminology corrections by more than half by integrating a glossary. This may lead people to assume that the more terminology you specify, the greater the benefit, but that is not true. An overcrowded glossary may even lead to more errors. The sheer volume overwhelms the system and, in the end, individual terms overshadow the desired end result. As is so often the case, the familiar principle also applies to glossaries: less is sometimes more.

Why glossaries are indispensable

Glossaries are a real game changer in helping to increase quality and decrease the work required later down the line. Our analyses from post-editing projects show that terminology changes account for between 14 and 45 per cent of all corrections. Therefore, they account for considerable time and costs.

The great advantage of glossaries is that your desired terminology is integrated into generic systems – i.e. into MT and AI systems that have never worked with your company-specific data before. This makes glossaries the perfect middle ground between complex AI training and time-consuming post-editing.

Above, we said that 14 to 45 per cent of corrections relate to terminology. There are many factors to explain this wide range:

- Scope of the specified terminology: The more specified terms there are, the greater the potential effort in correcting errors

- Domain: In highly specialised areas, generic MT systems often do not adequately implement the specialised terminology

- Language combination: There is significantly more training material for some languages than for others

- MT system: Tests repeatedly show that different systems deliver completely different results for the same technical terms

- Degree of standardisation: Does your company use standard industry terminology or its own terms?

The influence of glossary specifications: a double-edged sword

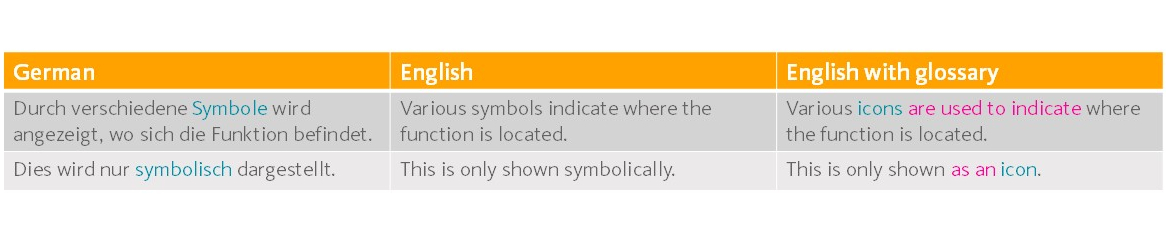

However, glossaries are not a sure-fire success. What you put in has an enormous influence on what comes out. The German word “Symbol”, for example, can be translated as “symbol” or “icon” depending on the context.

Machine pre-translation without glossary (centre) and with the specified term “Symbol = icon” (right). The changes to the rest of the sentence are highlighted in magenta. (Source: oneword GmbH)

Neural MT systems work based on word probabilities, meaning that, for each word, they calculate which other words it occurred within the training data used. Each glossary entry changes these probabilities, which means that the rest of the sentence can change as well as the technical term itself. This change can be positive if it results in greater accuracy or a better collocation, but it can be negative if a given term generalises the rest of the sentence too much, for example.

The right equipment makes all the difference

You basically have two technical options for creating a glossary: you can prepare a file and give it to the system (usually .csv, .xlsx or .tbx) or the MT system can access your terminology database directly. The latter usually takes place within CAT tools.

The correct allocation of terms is crucial for both variants. The most sensible approach is a ‘n:1′ allocation, where all terms in the source language (including permitted and prohibited terms) are assigned to a preferred term in the target language. This ensures that non-preferred terms in the source text are also recognised and translated correctly.

However, language is not always unambiguous. Therefore, in terminology databases, there are usually many cases in which it is not possible to assign the terms clearly or in which, depending on the context, more than one translation would have to be given for a term. However, ambiguities are stumbling blocks for the systems’ glossary functions, with the technical implementation in the background varying greatly. Some systems use the first option in the list by default when there are ambiguities, some use the last option by default. Others proceed alphabetically or simply ignore ambiguous terminology completely. As a user, you usually have no control over these technical details, which is why it is all the more important to prepare your data in the best way possible.

Avoiding typical sources of error

In addition to ambiguities, there are other stumbling blocks lurking in the construction of glossaries that can negate the quality enhancement. You should therefore avoid the following mistakes at all costs:

- Content errors: Incorrect terminology also ends up being incorrect in the machine translation

- Lack of differentiation: Different concepts are translated in the same way

- Out-of-context terminology: GUI texts or foreign terminology in the database

- Use of upper and lower case: Special product spellings can cause problems

- Compound words: A particular challenge, as MT systems often ignore hyphens

Practical solutions for better glossaries

To exploit the full potential of glossaries, the following approaches have proven successful in practice:

1. Check for duplicates

Search for duplicate or multiple terms in both the source and target languages. You will find related entries and terms that are spelt similarly or identically but that have different meanings. Then check: Is there a meaning that is correct in the majority of cases? Then add it to the glossary. If two meanings are equally likely, it is better to exclude both.

2. Carry out a database analysis

Not everything in your terminology database actually appears in your texts. A database analysis of your terminology database shows which terms are used and how frequently. This analysis helps with prioritisation, not only for glossaries, but for all terminology work. Mark active entries so that you can filter them later.

3. Identify potential for cleaning up data

To allocate terminology unambiguously, you need clear usage information. A script-based analysis shows where this is missing or ambiguous (several preferred terms per language). After cleaning up the data, you can automatically extract glossaries – ideally combined with the reduced database from the database analysis.

4. Bottom-up approach with feedback loop

Start with a basic database and add to it step by step. Use feedback from the post-editing process: Which glossary entries lead to errors? Which terms are missing? Sometimes it can even make sense to include general language words if these are frequently translated incorrectly by the MT system. However, such entries have no place in the terminology database.

Conclusion: more quality through lower quantity

Glossaries are an excellent tool for improving the quality of MT- and AI-generated translations. With a 62 per cent reduction in terminology corrections, the figures from our day-to-day MTPE work speak for themselves. The impact of these glossaries is enormous – for better or for worse.

Terminology databases that are unchecked or too extensive quickly lead to errors and additional work. Careful analysis, the targeted selection of relevant terms and continually optimising glossaries are key to better results.

Would you like to optimise your glossaries for MT and AI? Our experts at oneword will be happy to help you analyse your terminology database and create effective glossaries. We’ll be happy to provide a consultation.

8 good reasons to choose oneword.

Learn more about what we do and what sets us apart from traditional translation agencies.

We explain 8 good reasons and more to choose oneword for a successful partnership.