Machine translation

28/09/2022

Error sources in machine translation: How the algorithm reproduces unwanted gender roles

Teachers at primary schools are female, at universities male. In hospitals, the nursing staff is female, the medics are male. Women are pretty, men are successful. And German government officials are female, even if they are called Olaf. Welcome to the machine.

The success story of neural machine translation (NMT) may only be a few years old, but it is impressive, and it is already hard to imagine everyday life without it. The results produced by the machines are often just as impressive: translations read fluently and coherently in many languages and come across as linguistically correct and idiomatic. But a translation that is linguistically correct is not necessarily correct in content, let alone politically correct. After all, those who rely on machine translation (MT) always run the risk of stumbling over traditional stereotypes and gender bias. The following examples show that there are always surprises in store.

A male Bundeskanzlerin?

16 years is a long time. And a lot of data. 16 years ago, the term “Federal Chancellor” (Bundeskanzler) needed a female form in German for the first time. In the meantime, the political landscape has changed and yet a tweet announcing Olaf Scholz’s visit to the Spanish head of government Pedro Sánchez was rendered by Twitter’s own translation function as “Female Federal Chancellor” (Bundeskanzlerin) Olaf Scholz:

It almost feels like we are back in the days when machine translation was more likely to amuse people with slip-ups like “God store the Queen” instead of “God save the Queen”. (That Britain now has to get used to a king after seventy years of the Queen’s reign is another matter.) Since then, machines have made a quantum leap and now produce convincingly fluent and idiomatic results. So how can there still be a slip-up like this?

“We have always done it that way!”

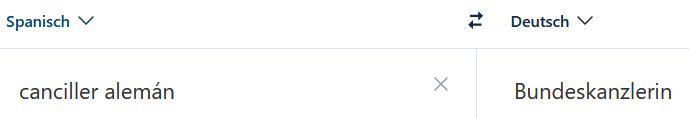

The well-known excuse against any form of change and innovation also explains many of MT’s mistakes. The Merkel era lasted 16 years, so from the point of view of neural machine translation it was almost always there. DeepL, for example, was launched in 2017, Google Translate in 2006. This means that both systems have learnt from millions of data records that Germany has a female chancellor. And even though the masculine form “canciller” was used in Spanish, the context and the MT’s underlying training material mean that the system outputs what it expects instead of translating what is actually written. The same result is even reached when the term is translated in isolation.

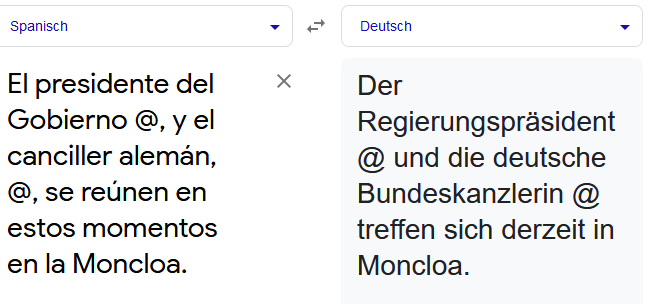

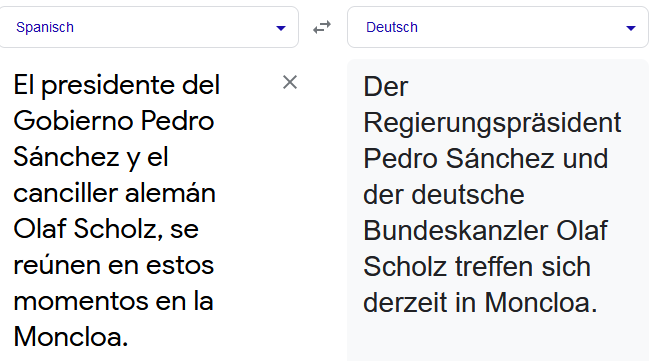

However, the tweet translation error is also encouraged when placeholders are used instead of names. Olaf Scholz’s name is not written out; the tweet includes a link to his Twitter account instead. As soon as the name appears in the text, the MT systems recognise the male first name as the context within the sentence and correctly change the translation to “Male Federal Chancellor” (Bundeskanzler).

Using placeholders instead of names

Using full names

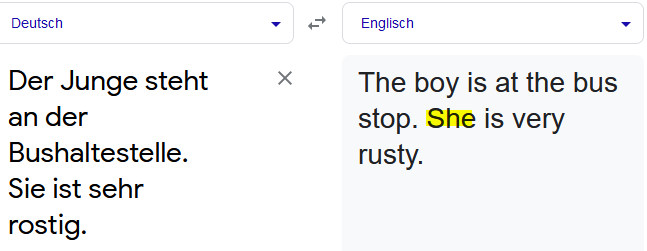
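The difference between placeholders and full names can be turned into a simple pre-processing step: before a text is sent to an MT system, known account handles are replaced with the written-out name. The following is only a minimal sketch of that idea. The handle, the lookup table and the Spanish sentence are invented for illustration and are not taken from the original tweet.

```python
import re

# Hypothetical lookup table: account handle -> full name of the person.
# The handle is a made-up placeholder, not the account used in the tweet.
HANDLE_TO_NAME = {
    "@example_chancellor": "Olaf Scholz",
}

# An @ sign followed by letters, digits or underscores.
MENTION = re.compile(r"@\w+")


def expand_mentions(text: str, names: dict[str, str]) -> str:
    """Replace known @handles with full names; leave unknown handles untouched."""
    return MENTION.sub(lambda m: names.get(m.group(0), m.group(0)), text)


# Invented source sentence, for illustration only.
source = "Hoy recibo al canciller @example_chancellor."
print(expand_mentions(source, HANDLE_TO_NAME))
```

With the name written out, the MT system at least has the male first name available as context within the sentence. Whether it actually uses that context still has to be checked in the output.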

Speaking of context: even though references within a sentence in continuous text are now recognised and reproduced very well by the machines, references beyond sentence boundaries harbour a high potential for error. Or to put it another way: the machines recognise context only up to the end of the sentence. A good example of this:

The second sentence might refer to “she” in the machine translation, even though it should be referring to a bus stop mentioned in the previous sentence, and so should read “it” instead. References are often missed because the texts are divided into individual segments for translation and each segment is then treated as an isolated element. And even with single sentences, it can make a big difference to MT output whether the sentences are transmitted to the system one after the other or simultaneously with other sentences.
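How segmentation cuts off a reference can be sketched in a few lines. The example below does not call any real MT system. It only shows what a system would receive when sentences are sent one by one, compared with what it would receive if the preceding sentence were passed along as context. The function names, the request layout and the German example sentences are assumptions made for illustration.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def build_requests(text: str, context_size: int = 0) -> list[dict]:
    """Build one translation request per sentence.

    With context_size=0 each segment travels alone.
    With context_size=n the n preceding sentences are attached as context.
    """
    sentences = split_sentences(text)
    requests = []
    for i, sentence in enumerate(sentences):
        context = sentences[max(0, i - context_size):i]
        requests.append({"context": context, "segment": sentence})
    return requests


# Invented example: "Sie" refers to "Die Bushaltestelle" (the bus stop).
text = "Die Bushaltestelle ist neu. Sie liegt direkt vor dem Rathaus."

isolated = build_requests(text)
with_context = build_requests(text, context_size=1)

print(isolated[1])      # the second segment on its own: "Sie" has no antecedent
print(with_context[1])  # the same segment with the previous sentence attached
```

In the isolated request, nothing tells the machine that “Sie” is a bus stop, so “she” is a plausible guess. Only the second variant gives it a chance to choose “it”.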

What gender references have to do with role patterns

However, it is not only references across sentence boundaries that are prone to errors when using a machine, but also references to people. In the above example, “she” is gender specific and is therefore translated by the machine as “she”. Some languages, however, do not have this gender-specificity at all, so statements can be formulated in a gender-neutral way.

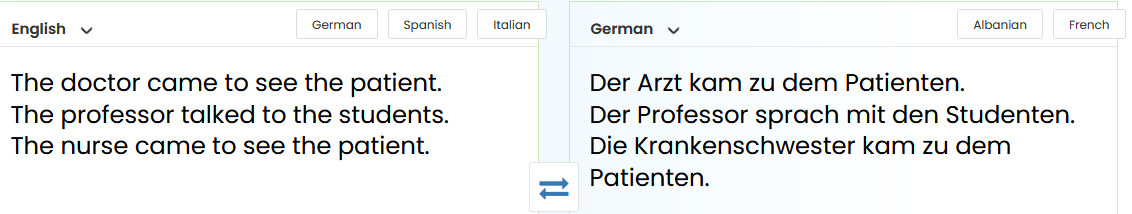

But what happens when a gender-neutral statement needs to be translated into a language that requires gender-specificity? The obvious assumption would be that, as with gendering, the generic masculine is simply always used.

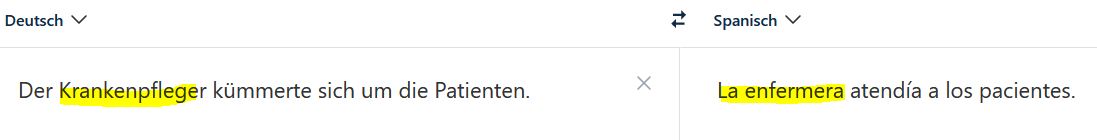

Unfortunately, both tests and practice show that gender bias often arises in these cases too. Characteristics, jobs and activities are translated by the machine based on the underlying training material, which often shows the extent of traditional gender roles and stereotypes. Gender-neutral job titles such as “doctor” and “professor” in English become masculine job titles in German. However, the equally gender-neutral job title “nurse” is given a female term in the translation.

This even happens when translating between gender-specific languages such as German and Spanish: here, too, the machine makes its own assumption about the gender within the job title. So, however you want to put it, nursing staff in hospitals seem to be fundamentally female for machine translation.
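A test of the kind mentioned above can be set up as a small probe: gender-neutral English job titles are passed to a translation function, and the grammatical gender of each German result is recorded. The sketch below is only an illustration. The lexicon is deliberately tiny, and `stub_translate` is a hard-coded stand-in that mimics the behaviour described in this article, not the output of any real MT system.

```python
from collections.abc import Callable

# Small illustrative lexicon: German job title -> grammatical gender.
GERMAN_GENDER = {
    "Arzt": "masculine", "Ärztin": "feminine",
    "Professor": "masculine", "Professorin": "feminine",
    "Krankenpfleger": "masculine", "Krankenschwester": "feminine",
}


def probe(titles: list[str], translate: Callable[[str], str]) -> dict[str, str]:
    """Translate each gender-neutral title and record the gender of the output."""
    return {t: GERMAN_GENDER.get(translate(t), "unknown") for t in titles}


def stub_translate(title: str) -> str:
    """Stand-in for a real MT call, hard-coded for demonstration purposes."""
    return {
        "doctor": "Arzt",
        "professor": "Professor",
        "nurse": "Krankenschwester",
    }[title]


report = probe(["doctor", "professor", "nurse"], stub_translate)
print(report)
```

To run the probe against a real system, `stub_translate` would be replaced by a function that calls the MT service in question.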

Machine translation can damage a company’s image

Since this bias not only includes job titles but also, depending on the language, ownership structures, activities and adjectives in general, stumbling blocks of this type lurk in many texts. In all communications, but especially in corporate communications, it is important to avoid them in order not to offend employees and customers or damage your image. After all, even when a company’s diverse and open self-image is supported through gendering in the source language, the machine translation can still bring traditional role patterns to light unfiltered.

However, there are interesting approaches towards making machine translation fair. One example is the application Fairslator, which uses common online MT systems and detects linguistic ambiguities in the source text. For each of these passages, the tool then asks specific questions, such as whether the group is all-male, all-female or mixed-gender. In the case of mixed-gender groups, users can then choose whether to use a generic or a gendered form in the target language. So the application works in the same way a human translator would: if anything is unclear, you must ask. In addition, these questions encourage text authors to formulate their texts more clearly in order to minimise enquiries from the outset.
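The principle of “if anything is unclear, you must ask” can be illustrated with a short sketch. It is not Fairslator’s actual implementation, which is not described here. The word list, the `Question` class and the answer options for individual persons are assumptions. Only the three options for groups are taken from the description above.

```python
from dataclasses import dataclass

# Hypothetical word list: English terms whose gender is open in the source,
# marked as referring to one person or to a group.
AMBIGUOUS_TERMS = {
    "doctor": "person",
    "nurse": "person",
    "teachers": "group",
}

OPTIONS = {
    "person": ("male", "female"),
    "group": ("all-male", "all-female", "mixed-gender"),
}


@dataclass
class Question:
    term: str
    prompt: str
    options: tuple[str, ...]


def find_questions(source: str) -> list[Question]:
    """Return one clarification question per ambiguous term in the source text."""
    questions = []
    for raw in source.split():
        word = raw.strip(".,;:!?").lower()
        kind = AMBIGUOUS_TERMS.get(word)
        if kind is not None:
            prompt = f"Who does '{word}' refer to?"
            questions.append(Question(word, prompt, OPTIONS[kind]))
    return questions


for q in find_questions("The teachers thanked the nurse."):
    print(q.prompt, q.options)
```

The answers would then be used to select the matching form in the target language, instead of leaving the choice to the training data.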

So, in summary, there are two points. Firstly, artificial intelligence always needs the human mind as a counterpart. For machine translation, the human element is a post-editor, who checks and corrects the entire output and asks questions if there are any ambiguities. And, secondly, perhaps MT systems use the female term Bundeskanzlerin simply because they think it is a generic term that includes men.

We also regularly present other typical sources of error in machine translation on LinkedIn in our “MTFundstück” section. Simply follow our company profile there.
